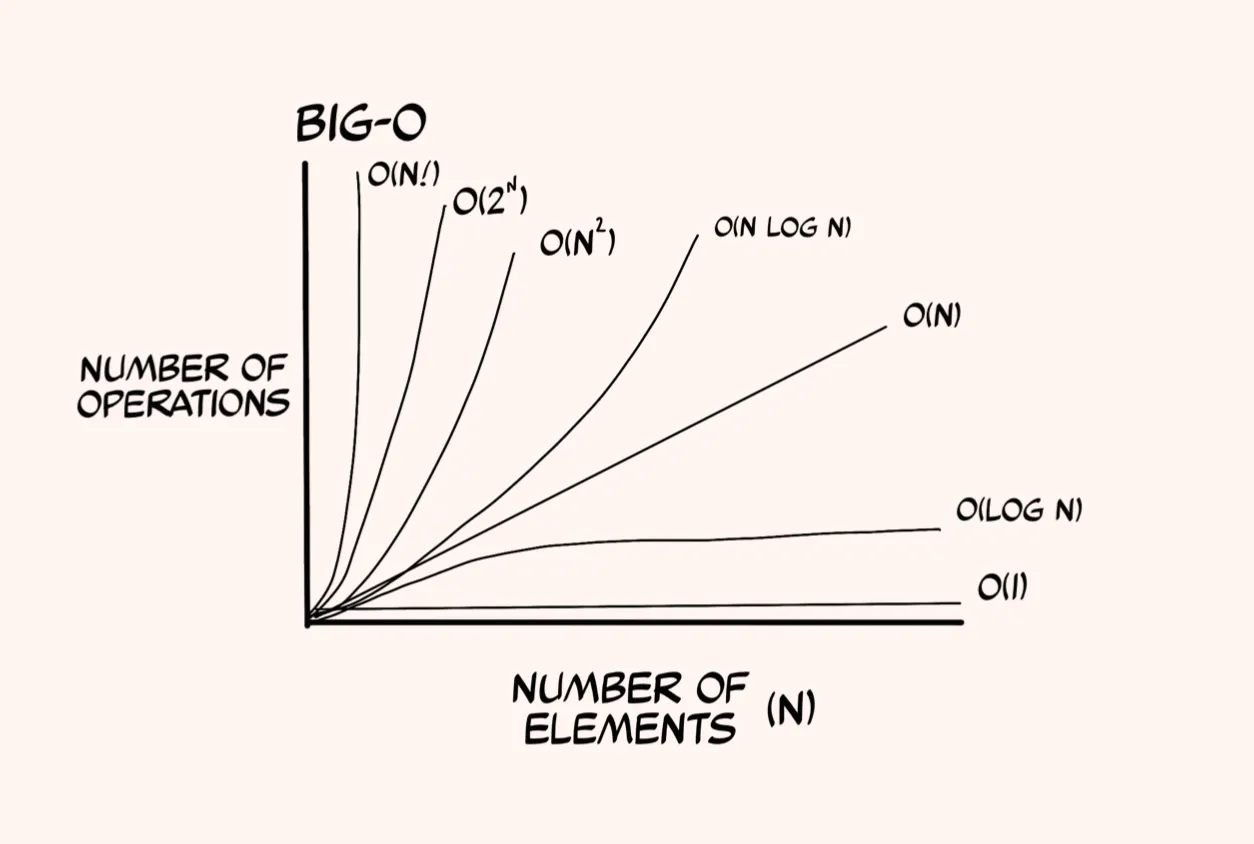

Big O Notation

Notable resource: big o cheatsheet

Big O notation is a notation we use to estimate runtime complexity of an algorithm.

O = number of operations

n = number of elements

A few key takeaways about runtime complexity:

- Generally, when we use Big O, we’re estimating for the worst case scenario.

- Big O is used to estimate how the complexity growths with respect to the input, not with respect to the algorithm.

- There are no constants in big O. E.g., never “10% of the input”, never half the input.

Constant time

O(1) — Will take the same amount of time to execute regardless of the amount of data. Note, this doesn’t mean we only do one thing, it simply means we take the same amount of time regardless of the input. For example, reading an index from an array takes the same amount of time regardless of if an array is 10 items or 30000 items.

Logarithmic time

O(log n) — logarithmic in nature, the size of the workload decreases by half each time it runs. Binary search.

Linear Time

O(n) — grows linearly and proportionally to the size of the input. For example, a for loop.

Super-linear time

O(n log n) — is less performant than a linear algorithm but more perofrmant than an exponential algorithm.

Polynomial time

O(n2) — describes an algorithm where the performance is directly proportional to the square of the data size. Commonly found in algorithms using two nested for-loops, like bubble sort.

Exponential time

(O2n) — Exponential time algorithms double with each new data point. For example, recursive Fibonacci implementation.

Factorial time

O(N!) — the worst performing time complexity, grows rapidly as the size of n increases. Travelling salesman problem.

TIPS AND TRICKS

Some shorthand and tips and tricks.

- If the input halves everytime, it’s probably logarithmic O(log n) (or super-linear time).

- If you see a loop, think linear time. Loop -> linear O(N).

Last modified:

Last modified:  Last modified:

Last modified: